acK: know your pipeline

Toward near-real-time monitoring of pipelines

Craig is a doctoral candidate in Electrical Engineering at the Colorado School of Mines, researching vendor-neutral methods for aligning pipeline inspection data. His work includes an objective minimization algorithm for ILI signal matching, two ILI box matching algorithms (Bayesian and transductive), and an ILI weld matching algorithm that uses odometry error. He holds a Master’s in Computer Science from CSM and a Bachelor’s in Mechanical Engineering from Georgia Tech.

-

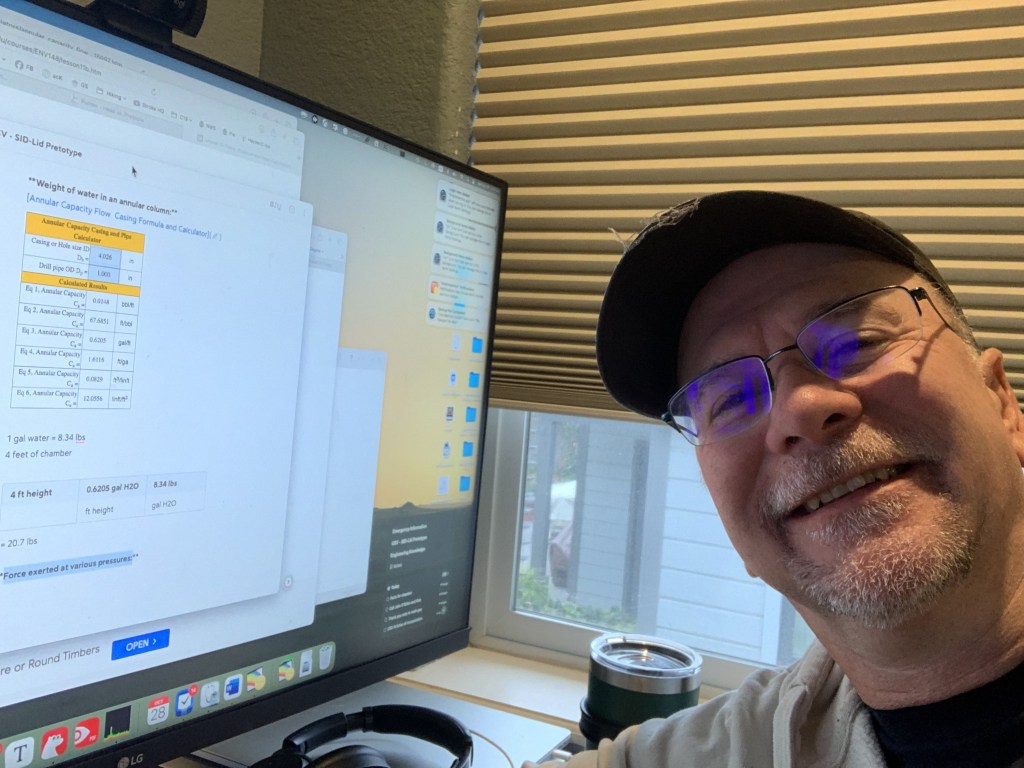

Calculating small distances between lat/long coordinates using Excel

*(Technical)* The most common GCS distance calculation, the Spherical Law of Cosines (SLoC), gives poor results at short distances. This is an issue when calculating joint lengths from latitude/longitude pairs. At these short distances, the spherical law of cosines has a precision of only 4.5%. In contrast, the three alternate techniques I compare with…
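To make the comparison concrete, here is a minimal Python sketch of the SLoC formula next to the haversine formula, a widely used alternative for short distances. The excerpt does not name the three techniques the post compares, so haversine is only an illustrative choice. The Earth radius constant and the function names are assumptions made for this sketch. The post itself works in Excel, where arithmetic and rounding may behave differently.

```python
import math

# Assumed mean Earth radius in metres; the post may use a different value.
EARTH_RADIUS_M = 6_371_000.0


def sloc_distance(lat1, lon1, lat2, lon2):
    """Distance in metres via the spherical law of cosines (inputs in degrees)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    cos_angle = (math.sin(p1) * math.sin(p2)
                 + math.cos(p1) * math.cos(p2) * math.cos(dlon))
    # For nearby points cos_angle sits very close to 1, so rounding can push it
    # slightly out of range; clamp it before calling acos.
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return EARTH_RADIUS_M * math.acos(cos_angle)


def haversine_distance(lat1, lon1, lat2, lon2):
    """Distance in metres via the haversine formula (inputs in degrees)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlon = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
```

The SLoC weakness comes from taking the arccosine of a value extremely close to 1: over a joint-length separation, only the last few digits of that value carry the distance information. Haversine works with the sine of half the angle instead, which stays well conditioned as the points converge.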

-

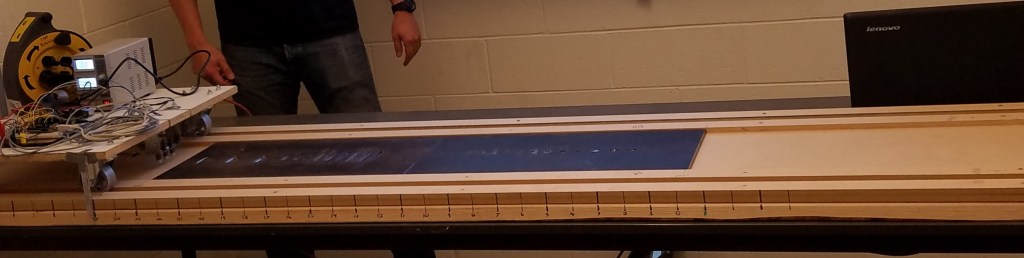

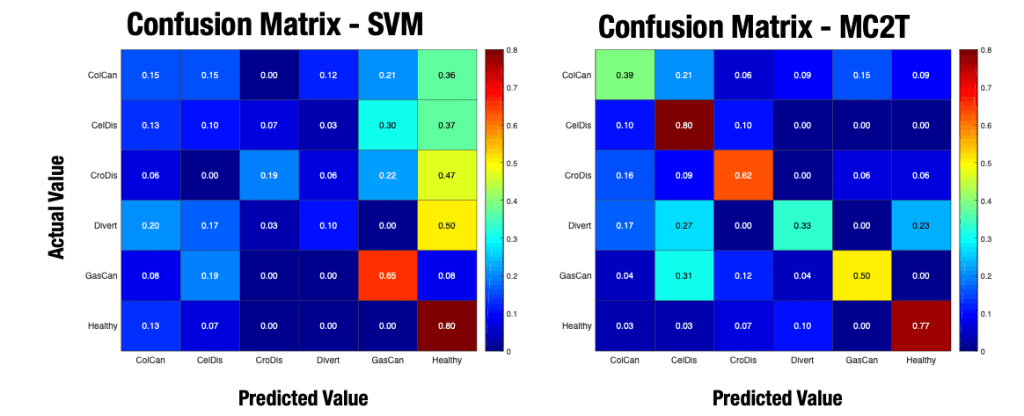

Combining sensing modalities

*(Technical)* Suppose multiple sensors have traversed a section of pipe with the intent of detecting axial cracking. Rather than concern ourselves with which tool does the “best” job of detecting the phenomenon, we can treat the signal from each inspection as a piece of evidence supporting or refuting the hypothesis that axial cracking is…
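One simple way to picture "each inspection as a piece of evidence" is a Bayesian odds update, sketched below in Python. This is an illustrative assumption, not necessarily the method the post uses: it treats the inspections as conditionally independent, and the function name `combine_evidence`, the prior, and the likelihood ratios are all hypothetical.

```python
def combine_evidence(prior, likelihood_ratios):
    """Posterior probability of a hypothesis after several pieces of evidence.

    prior: probability of the hypothesis before any inspection (0 < prior < 1).
    likelihood_ratios: one value per inspection, each equal to
        P(signal | hypothesis true) / P(signal | hypothesis false).
        Values above 1 support the hypothesis; values below 1 refute it.
    Assumes the inspections are conditionally independent (a naive Bayes
    simplification made for this sketch).
    """
    odds = prior / (1.0 - prior)
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds / (1.0 + odds)


# Hypothetical numbers: a 5% prior, two tools that support the hypothesis
# and one that mildly refutes it.
posterior = combine_evidence(0.05, [4.0, 3.0, 0.8])
```

Under this framing no tool has to be declared the winner: a weak tool contributes a likelihood ratio near 1 and moves the estimate only slightly, while a strong tool moves it more.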

-

A game of outliers

*(2-minute read)* Best practices are already pretty good: the best integrity management practices catch almost all impending failures. What we need is a better way of catching the outliers.